It started like a dream: one AI model, 500 pages, full automation.

Then the hallucinations began.

A 500-person conference ballooned to 50,000 attendees. London hotels appeared in Poland. Finance events became “cybersecurity” summits.

Approval rate: 42%.

We couldn’t ship it as manual fixes would defeat the whole automation ROI. We didn’t need AI that sounded confident. We needed AI that was scared of being wrong.

So we built AI that doubts itself.

One week later, the same system hit 95% approval rate - at just $0.10 per page and zero human writers. We did it not by making AI smarter, but by making it paranoid.

See the event pages we built →

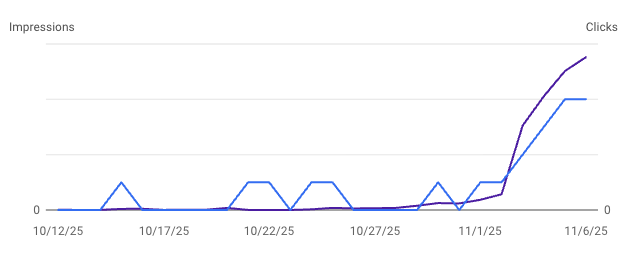

The system now generates hundreds of event pages automatically. Here’s what the performance looks like:

The Moment We Realized Prompts Weren’t the Problem

We started where everyone starts: GPT-4, carefully crafted instructions, one-shot generation. Give it the name of a conference like “RSA Conference”, get back a complete landing page with 20+ fields: attendee, speaker and sponsor counts, personas attending, topics covered, FAQs about the event, SEO metadata, the works.

The AI would confidently generate content that sounded right with professional tone, plausible numbers, proper formatting. But dig into the details and you’d find:

- Attendee numbers inflated 10x (“25,000” when the actual number was 2,500)

- Made-up venues that don’t exist (“Grand Ballroom at Marriott Conference Center”)

- Wrong event categories (retail tech summit → “fintech” because one session covered payments)

- SEO titles that violated Google’s character limits

- Geographic nonsense (venue in one country, city in another)

One model can’t be researcher, writer, and fact-checker at once. LLMs optimize for plausibility, not truth. They’ll generate content that looks professional while hallucinating half the facts. No amount of prompt engineering fixes that fundamental problem.

We needed a different architecture.

The Breakthrough: Make AI Skeptical

Instead of asking one agent to be perfect, we built three agents that don’t trust each other:

Agent 1: Research - “Just get the data, I don’t care if it’s pretty”

- Scrapes 3-5 key pages from the event website

- Pulls stats, dates, topics, personas (raw and unfiltered)

- Uses Google Search when website scraping comes up empty or an important info is missing

- Cost: ~$0.10-0.30 per event (the bulk of our budget)

Agent 2: Build - “Just structure it, don’t worry if it’s accurate”

- Takes messy research notes and organizes them into clean JSON

- Formats numbers for marketing (619 attendees → “600+”)

- Strips tracking junk from URLs

- Trusts Research Agent completely

- Cost: ~$0.01 per event

Agent 3: QA - “I trust nobody, catch everything”

- Runs 50+ validation rules on every page

- Checks data accuracy, brand guidelines, SEO compliance

- Uses a second LLM to catch nuanced errors rules can’t spot

- Rejects anything suspicious

- Cost: ~$0.02-0.10 per event

QA Agent rejects 30% of outputs. Not because the system is broken, but because that’s exactly what it should do. We argued about this for two weeks. Our first instinct was to “fix” the rejection rate. Then we realized: the rejections are the system working. Paranoia is the feature, not the bug.

What the QA Agent Actually Catches

The “that’s impossible” catches: We found an event claiming 2,000 exhibitor companies but only 1,500 attendees. QA Agent flagged it immediately: “Each company needs at least one person. This is physically impossible.” Another event had 500 speakers at a 400-person conference (more speakers than audience). Auto-rejected. The validation uses B2B event industry benchmarks: 2-5 attendees per company, 10-50 attendees per speaker. When the ratios are off, something’s wrong.

The “you’re confusing users” catches:

A finance event got labeled “cybersecurity” because one session covered fraud prevention. The semantic validator caught it: “This is accounts payable automation, not security. One fraud topic doesn’t change that. Correct category: fintech.”

Why it matters: Users filter by category. Finance pros looking for AP events won’t check “cybersecurity.” Wrong category = invisible to your target audience.

The “this will hurt SEO” catches: An event in October 2026 got SEO metadata referencing “2025.” QA caught the year mismatch and forced regeneration with consistent dates. Meta descriptions were averaging 180 characters when Google truncates at 155. All auto-flagged, all fixed before going live.

The “wait, what?” catches: One event got assigned “InterContinental London” as the venue but “Kraków, Poland” as the city. The AI had scraped past venue information (London) and mixed it with the current location (Kraków). We laughed when we first saw it, then realized if we’d shipped that, users wouldn’t laugh. They’d leave. Geographic mistakes destroy trust instantly.

The Feedback Loop: How Agents Improve Each Other

When QA Agent rejects an event, it doesn’t just say “this is bad.” It parses the specific errors and tells Research Agent what to fix.

→ Iteration 1: Full research

- 60% pass on first try

- QA provides feedback: “Missing speakers count, wrong category, need state field”

→ Iteration 2: Targeted regeneration

- Research Agent retries, but smarter

- Reuses the cached website scrape (saves time and money)

- Only regenerates the failing parts (category, speakers, state)

- 25% more pass (85% total)

- Cost: ~$0.02 per event (only fixing specific issues)

→ Iteration 3: Last chance

- Research Agent in “CRITICAL mode” (maximum effort)

- 5% more pass (90% total)

- Cost: ~$0.01 per event

Even with multiple attempts, average cost stays consistent because most events pass on first or second try. Iteration, not perfection.

Why This Matters for Marketing Teams

When you build systems like this, your job shifts from producing content to engineering consistency. You’re no longer managing writers. You’re managing accuracy thresholds, iteration speed, and model cost. Instead of hiring more people to scale, you’re tuning validation rules, adjusting quality gates, and teaching agents to spot patterns you care about. The skill becomes systems thinking, not team management.

What We Learned (The Hard Way)

Separate the jobs, not the models. Don’t ask one AI to research, write, and fact-check. Split the work. Research extracts data. Build formats it. QA validates it. The moment we split roles, error rates halved. We almost built a single “super prompt.” Would’ve kept us stuck at 42% forever.

Iteration beats perfection. Your first attempt will fail 30-40%. Build the correction loop: QA → parse errors → targeted fix → repeat. We almost killed the project at 42% approval. “Just hire writers,” someone said. Then iteration 2 hit 85%. The system needed room to improve itself. Stop chasing 100%. The cost of 90% to 95% is massive, the value tiny.

Structured data unlocks bulk magic. Week 2, we fixed 500 SEO titles in 2 hours for $10. Mid-project, we added custom FAQs to every event for another $10. Output as JSON, not HTML. You can bulk-update hundreds of pages when requirements change. Try that with manually-written content.

Make quality measurable. Every page gets a 0-100 score. Clear thresholds: 90+% auto-approve, 70-89% manual review, <70% reject. This lets you prove ROI and track improvements. You can’t manage what you can’t measure.

Use cheap models aggressively. Validation doesn’t need GPT-4. Gemini Flash Lite works just as well at 1/10th the cost. Match model power to task complexity. Use expensive models only where you need them.

The real ROI is scalability. Yes, we went from $25k-50k (manual) to $50 (automated). But the real unlock: add 1,000 more events tomorrow? Just run the script. Event changes next year? Re-run the agent and refresh all 500 pages automatically. Bulk-update when requirements change: 2 hours, not 2 months.

When This Architecture Makes Sense

Use it when:

- You need 100+ assets (setup takes time)

- Quality matters (can’t ship hallucinated data)

- You’ll iterate (content requirements will change)

- Data is structured (not freeform creative prose)

Don’t use it for:

- 5-10 one-off pieces (just use ChatGPT)

- Freeform creative content (poetry, storytelling)

- Content that won’t change (no iteration needed)

The Takeaway

Marketing automation needs systems that catch their own lies. Drop a CSV, and the agents scrape, validate, and publish automatically. No human in the loop. That’s production-ready, not an LLM demo.

The future isn’t smarter prompts. It’s systems that doubt themselves before your customers ever can.

We Now Help Other Teams Do This

What started as an internal project has turned into consulting work helping companies design and deploy multi-agent systems across marketing, data, research, and operations. Whether it’s validating content, automating enrichment, or building autonomous workflows, the playbook is the same: small, specialized agents that check each other’s work.

I help teams design and deploy agent workflows across marketing, data, and operations. If you want to test a use case or pressure-test your first architecture, reach out.